We compared ChatGPT 5 Thinking and Gemini 2.5 Pro on the simple task described below. Incidentally, the image above was generated by Gemini and then cropped.

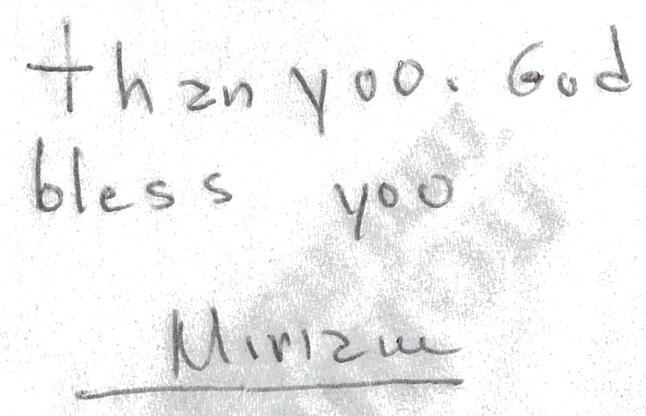

The image below is a warm note that the housekeeping staff wrote to thank me for a tip during a hotel stay.

As for how the name written at the end should be read, a human would likely reason as follows:

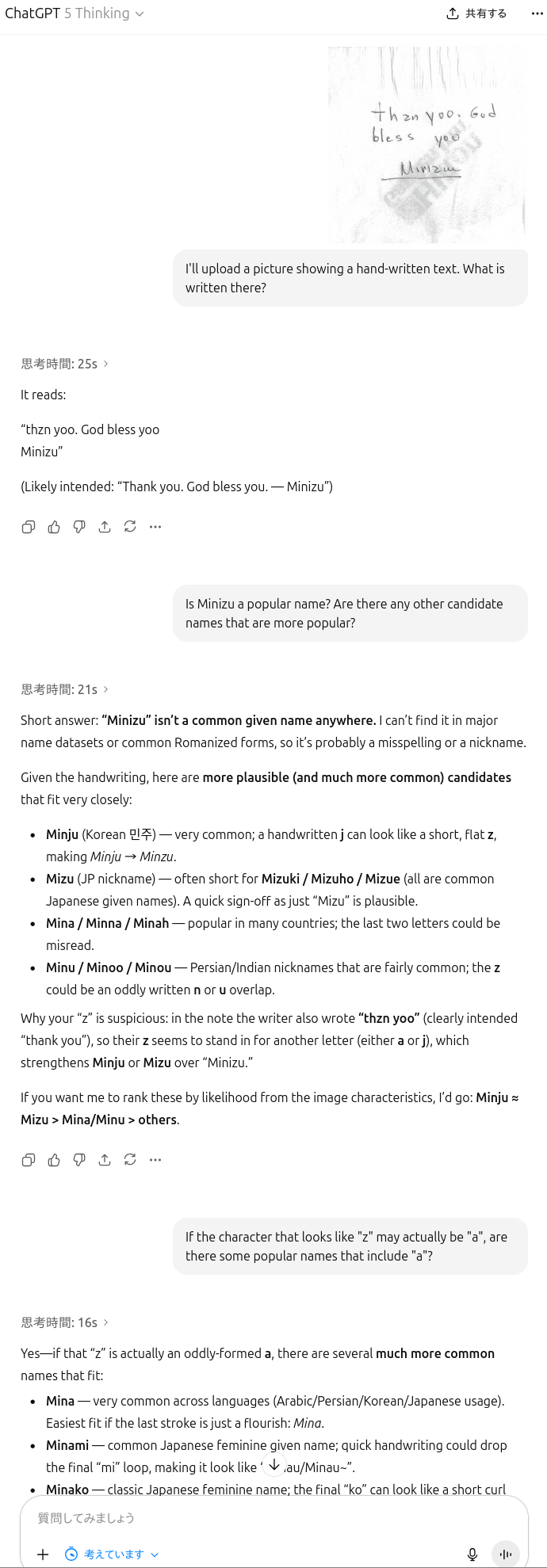

So we gave the same task to ChatGPT 5 Thinking and Gemini 2.5 Pro.

First, ChatGPT 5 Thinking. Even with a little guidance from us, it never arrived at “Miriam.” It did say that the letter that looks like a “z” is probably an “a,” yet when it first listed candidates it did not treat the fifth letter of the name as an “a.” This may be because it seems to read the third letter of the name as an “n.” It also mentioned Japanese and Korean names; could that be because the query came from a Japanese IP address? We asked in English to try to avoid this.

Next is Gemini 2.5 Pro. It is not entirely clear whether it inferred that the third letter of “thzn” and the fifth letter of the name are the same glyph, but it arrived at “Miriam” from the start.
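The inference discussed here (one ambiguous glyph, resolved in a familiar word and then reused in the name) can be written out as a small Python sketch. This is only an illustration of the reasoning, not what either model does internally. The literal readings are assumptions: “thzn” is taken to stand for “than” (as at the start of “thank”), and `Mirizm` is a hypothetical letter-by-letter reading of the name with the z-like glyph in fifth position. The function names are made up for this example.

```python
def infer_glyph_map(observed: str, intended: str) -> dict[str, str]:
    """Map each glyph that was read differently to the letter it must stand for."""
    if len(observed) != len(intended):
        raise ValueError("observed and intended tokens must have the same length")
    return {o: i for o, i in zip(observed, intended) if o != i}


def apply_glyph_map(reading: str, glyph_map: dict[str, str]) -> str:
    """Re-read a token, replacing every glyph that has a known correction."""
    return "".join(glyph_map.get(ch, ch) for ch in reading)


# Assumption: "thzn" is the literal reading of a word whose letters are "than".
glyph_map = infer_glyph_map("thzn", "than")

# Assumption: "Mirizm" is a hypothetical literal reading of the signature.
name = apply_glyph_map("Mirizm", glyph_map)
print(name)
```

The point of the sketch is that the correction is learned once from a word whose intended spelling is obvious, then applied unchanged to the name, where no dictionary can help.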

Ultimately, the “correct answer” to this task is unknown, but both models appear to reproduce the first step a human would take: inferring that the letter that looks like a “z” is most likely an “a.”